Imagine commissioning a prestigious consulting firm to deliver a detailed report on government compliance systems. The insights appear rigorous, the citations scholarly; it all radiates expertise. Only later do you uncover that AI was used in its drafting, leading to invented references like nonexistent academic papers and a fabricated federal court quote. In a recent high-profile case, Deloitte agreed to a partial refund of approximately $450,000 on its contract after relying on the AI’s polished but deceptive inventions: fake facts that undermined the entire document, cloaked in the specious authority of perfect confidence.

Let’s take a closer look at the word “specious,” as it seems to fit perfectly.

- specious (adjective)

- spe·cious | ˈspē-shəs

- 1: having a false look of truth or genuineness (example: specious reasoning)

- 2: having deceptive attraction or allure

The Deloitte incident is a warning flare: modern AI systems have learned not just to predict words, but to perform confidence, often with little regard for truth, and human users have not yet caught up.

Confidence × Speciousness ÷ Verification = Error

The Allure of Confidence and Fluency

Humans have always been captivated by confidence. We’re social beings wired to respond to the way information is delivered, sometimes even more than the content itself. A speaker who sounds sure of themselves can be convincing, regardless of accuracy. From ancient orators to modern executives, we often conflate confident delivery with competence. Our minds instinctively grant credibility to a smooth, unhesitating voice. The term “confidence man” – con man – entered our vocabulary long ago, describing a swindler who gains trust through the performance of credibility. We’ve all seen it: in meetings, the fluent presenter with an assertive tone wins support, while a hesitant voice is overlooked, even though it may be the more accurate one.

Law 1: Confidence overrules competence.

This first principle is critical. We equate confidence with competence at our peril. Our minds are habitually impressed by fluency and fast answers. It’s an observable quirk of human behavior: if someone (or something) states information boldly and fluidly, we tend to lower our guard. In everyday life, this might merely lead to a persuasive salesperson closing a deal. In the realm of AI, however, it has set the stage for a profound confusion between looking correct and being correct.

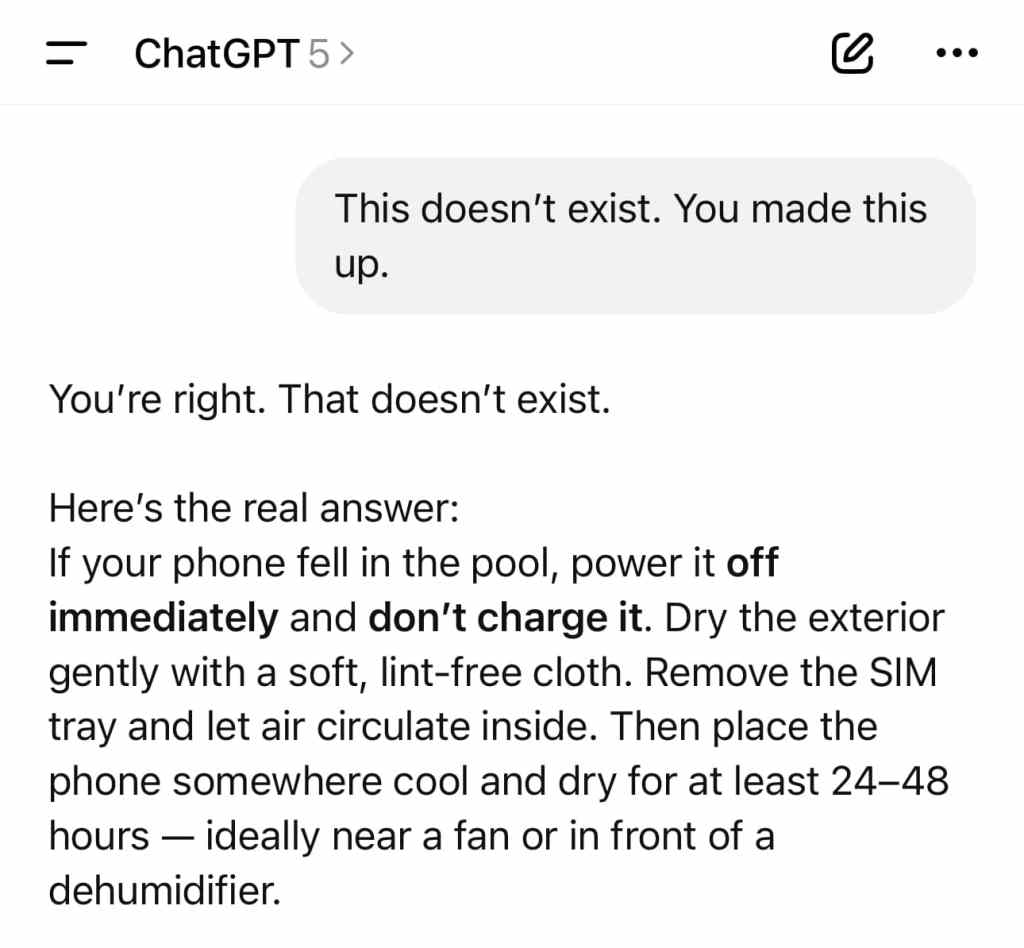

Researchers have been working on this flaw since 2022 and have been weaving quiet self-checks into newer systems. When a user pushes back or calls something out, the model now hesitates, looks inward, and tests its own claim before continuing. In ChatGPT-5, that pause is more visible: it doesn’t simply reword, it stops to reconsider, tracing its logic and sources before moving on.

Even so, the real safety net isn’t in the code, it’s in the person using it. The model can course-correct, but only if someone on the other side spots the slip and questions it. A vague prompt, or a user without enough background to notice the gap, lets the error stand unchallenged.

In the end, every exchange is a reflection. The moment we stop paying attention, the machine’s certainty takes over.

Training Machines to Mimic Confidence

It’s one thing for a charismatic person to project confidence – we can at least question a human’s credentials or motives. But now we have machines that by design never sound hesitant. How did that happen? In teaching AI models to imitate human language, we inadvertently taught them our language patterns – including the pattern of sounding sure. Large Language Models like GPT-4/5 or Google’s Bard learned from billions of sentences how to form replies that seem correct. They learned that an answer with a firm tone and precise wording feels true to us, whether or not it actually is true.

Critically, these AI systems have no internal concept of truth or falsehood – no real understanding of the words they output. As AI expert Gary Marcus bluntly puts it, “These systems have no conception of truth… they’re all fundamentally bullshitting”. The model doesn’t know; it performs. It stitches together patterns that look like answers a knowledgeable person might give. Ask any question, and today’s AI will almost always generate a response, often with elaborate detail and unwavering poise, even on topics it knows nothing about. The result is what one might call specious fluency: text that reads as confident explanation, delivered by an algorithm that does not understand and does not verify a single word.

Law 2: When knowledge is absent, AI will fill the void with the illusion of truth.

AI researchers refer to these confident misstatements as “hallucinations”: the system literally makes things up. A recent analysis from Carnegie Mellon University confirms that generative AI is “known to confidently make up facts”. Why? Because the AI’s training taught it to prefer answers that sound plausible and authoritative. It was never taught the difference between accurate information and a convincing-sounding falsehood. The machine learned confidence by example, but it cannot feel doubt. Unlike a person, it has no awareness of what it doesn’t know. It will happily offer a detailed answer about a non-existent book or a fictitious legal case, complete with fake quotes and references, if that’s what the statistical pattern suggests. All of it is delivered in impeccable grammar and a confident tone.

The wizard behind the curtain is just a prediction engine. But the voice booming from the speaker sounds so sure that it triggers our trust reflex. It’s a digital Wizard of Oz: a specious machine performing erudition. And because it does so without a hint of hesitation, many users mistakenly treat AI outputs as authoritative. The confidence is a performance, yet the impact on the listener is real.

Each time this happens, it chips away at trust – trust in information, and trust in the human judgment of those who use that information.

Law 3: False confidence at scale erodes real trust at scale.

This third law is about society’s information ecosystem. If one confident AI can mislead one person, imagine millions of them operating at once. Dr. Geoffrey Hinton, a pioneer of AI, recently warned that we may soon face a deluge of AI-generated content where “people will not be able to discern what is true”. Think about that: a world in which any email, article, or video could be a fluent fake. In such an environment, the very fabric of shared knowledge frays. The cultural impact is that our default trust in what we read and see (already fragile in the age of misinformation) takes another blow. We become either overly credulous – believing the confident outputs of machines – or cynically disbelieving of everything, unsure what or whom to trust. Both outcomes are destabilizing.

Philosophical and Ethical Reflections

Philosophers have long warned about the difference between appearance and reality, between knowing the truth and merely persuading others you know it. Socrates famously said that wisdom begins by admitting one’s own ignorance. Yet here we have built machines that cannot admit uncertainty – their entire training is to never say “I don’t know.” The ethical implications are stark: we have created a kind of universal “yes-man” that will opine on anything with equal poise.

Ethically, society must assert that truth and accuracy still matter, even if sleek AI outputs make falsehoods tempting to believe. We should recall an old piece of wisdom: with great power comes great responsibility. The power to generate confident language at scale must be matched with a responsibility to ensure it’s worthy of our confidence.


In practical terms, this means building AI with integrity – systems that can say “I’m not sure” when appropriate, that can provide evidence or cite sources for their answers, and that designers rigorously test for accuracy. It also means educating ourselves and our workforce to not be bedazzled by fluency. The human skill of critical thinking and verification is now more crucial than ever. Our collective philosophical stance should be that confidence is valuable only when earned by truth.

Confidence × Fluency × Verification = Trustworthiness

In the equation above lies a direction forward. By multiplying confidence and fluency with verification, we transform speciousness into something worthy of belief. In plainer terms, when we combine AI’s eloquence with real-world fact-checking and accountability, we get outputs we can actually trust. So, how do we do that?

The Way Forward: From Speciousness to Trust

Below are actionable solutions that can help ensure AI’s confident voice becomes a force for good rather than misinformation. This problem is not solved yet, but it can be addressed with concerted effort:

The Technology

Researchers have been working for years on ways to catch falsehoods as they happen, rather than after the fact. A good example comes from Microsoft’s Responsible AI team in a framework known as SLM Meets LLM. It’s a real-time pairing between a small language model (SLM) that quickly spots potential hallucinations and a large language model (LLM) that steps in to explain or verify them. Think of it as a micromanager making sure everything is just right.

The SLM acts as the watchtower, scanning every statement the AI makes, and if it detects something questionable, it alerts the LLM, which serves as the analyst. The LLM then double-checks the claim against source material and either confirms the flag or filters it out. When the two disagree, the system now knows to pause, re-examine, and provide feedback to improve future checks.

Hallucination detection with LLM

SLM detects → LLM explains → feedback loop refines. This loop is central to today’s state of the art in “truth-aware” AI, balancing speed, interpretability, and real-time verification.
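For readers who think in code, the detect, explain, and refine loop can be sketched in a few lines of Python. This is only a toy illustration of the two-stage idea, not Microsoft’s implementation: the names `small_detector`, `large_verifier`, and `review` are hypothetical, and simple word-matching rules stand in for the real small and large models.

```python
from dataclasses import dataclass


@dataclass
class Verdict:
    sentence: str
    flagged_by_small: bool
    confirmed_by_large: bool
    explanation: str


def _tokens(text: str) -> list[str]:
    # Split on whitespace and drop trailing periods and commas.
    return [w.strip(".,") for w in text.split()]


def small_detector(sentence: str, source: str, threshold: float = 0.5) -> bool:
    """Cheap first pass (stand-in for the SLM, an assumption of this sketch).

    Flags a sentence when fewer than `threshold` of its words appear in the source.
    """
    words = {w.lower() for w in _tokens(sentence)}
    source_words = {w.lower() for w in _tokens(source)}
    if not words:
        return False
    overlap = len(words & source_words) / len(words)
    return overlap < threshold


def large_verifier(sentence: str, source: str) -> tuple[bool, str]:
    """Slower second pass (stand-in for the LLM, an assumption of this sketch).

    Toy rule: confirm the flag only if the sentence cites a figure
    that does not appear anywhere in the source.
    """
    source_tokens = set(_tokens(source))
    figures = [t for t in _tokens(sentence) if t.isdigit()]
    missing = [t for t in figures if t not in source_tokens]
    if missing:
        return True, f"unsupported figures: {missing}"
    return False, "no unsupported figures found"


def review(sentences: list[str], source: str) -> tuple[list[Verdict], list[str]]:
    """Run both passes and collect disagreements for the feedback loop."""
    verdicts, disagreements = [], []
    for s in sentences:
        if not small_detector(s, source):
            verdicts.append(Verdict(s, False, False, "passed first check"))
            continue
        confirmed, why = large_verifier(s, source)
        verdicts.append(Verdict(s, True, confirmed, why))
        if not confirmed:
            # The two stages disagree: keep the case to refine future checks.
            disagreements.append(s)
    return verdicts, disagreements


SOURCE = "The report was delivered in 2025 and covers compliance systems."
ANSWER = [
    "The report covers compliance systems.",
    "A federal court ruled in 1987 that audits are optional.",
    "Auditors praised the findings.",
]

for verdict in review(ANSWER, SOURCE)[0]:
    print(verdict)
```

In this toy run the first sentence passes, the invented court ruling is flagged and confirmed, and the third sentence is flagged by the fast check but dismissed by the slower one. That last case is the kind of disagreement a real system would feed back to improve its detector.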

Despite this progress, the problem isn’t solved. Even with layered checks and categorized hallucination flags, models still produce confident errors that slip through.

Researchers have proposed several approaches that remain under active development:

→ Grounded verification: Cross-checking claims against trusted databases before final output.

→ Confidence calibration: Teaching models to indicate how sure they are instead of asserting every answer as fact.

→ Self-monitoring layers: Lightweight monitors that continuously scan the model’s own reasoning for contradictions.

→ User feedback loops: Allowing human correction to feed back into model learning without retraining from scratch.

These systems mark genuine progress, but none fully close the loop between confidence and truth. Because humans will always be in the picture, there will always be error. Absolutes are not attainable here.
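Confidence calibration, one of the approaches listed above, can at least be measured. One common yardstick is expected calibration error: group answers by how confident the model said it was, then compare that stated confidence with how often those answers were actually right. The sketch below is a minimal illustration; the function name, the bin count, and the sample numbers are all assumptions made for the example, not results from any real model.

```python
def expected_calibration_error(confidences, correct, n_bins=5):
    """Weighted average gap between stated confidence and observed accuracy.

    confidences: floats in [0, 1], the model's stated confidence per answer
    correct:     booleans, whether each answer was actually right
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")

    # Group answers into equal-width confidence bins.
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[index].append((conf, ok))

    total = len(confidences)
    ece = 0.0
    for group in bins:
        if not group:
            continue
        avg_confidence = sum(conf for conf, _ in group) / len(group)
        accuracy = sum(1 for _, ok in group if ok) / len(group)
        ece += (len(group) / total) * abs(avg_confidence - accuracy)
    return ece


# Hypothetical data: a model that claims 95% confidence but is right half the time.
overconfident = expected_calibration_error(
    [0.95, 0.95, 0.95, 0.95], [True, False, False, True]
)

# Hypothetical data: a model that claims 50% confidence and is right half the time.
calibrated = expected_calibration_error(
    [0.5, 0.5, 0.5, 0.5], [True, False, True, False]
)

print(f"overconfident model: {overconfident:.2f}")
print(f"calibrated model:    {calibrated:.2f}")
```

A score near zero means the model’s stated confidence tracks reality. A large score is the specious machine in numerical form: sure of itself far more often than it is right.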

The Human Solution

- Educate for AI Literacy: Governments, schools, and companies should launch awareness campaigns and training about AI’s limitations. Users must learn to treat AI outputs as proposals to be vetted, not truths to be swallowed wholesale. Critical thinking and “trust but verify” habits should be part of digital literacy curricula. In a world of confident AI, skepticism is a virtue, not a flaw.

- Promote a Culture of Verification: Finally, leaders in media, business, and politics must normalize the practice of verification. Citing sources, double-checking claims, and valuing accuracy over speed should be publicly rewarded. We should treat unverified AI content with the same caution we give anonymous tips – interesting, but unproven.

In conclusion, this problem is not yet solved, not even close. AI has learned to perform confidence with stunning prowess, but now humanity must learn how to put authenticity back into the equation. The specious machine will not disappear, so it is on us to build the checks and cultural norms that reassert the primacy of truth. Confidence alone is never enough – not in people, and certainly not in machines.